Your system is not stable, it's outdated

One thing that I, as an Arch user[1], have heard more often than I can count is that Arch Linux is unstable, as opposed to Debian and Ubuntu[2]. Over time, I have made a mental journey from the classic “no, my system isn’t unstable, you are, you damn sid[3] user!” to a (hopefully) more nuanced viewpoint.

This notion of stability™, touted by many as one of the most important aspects of a distro (if not the most important), has nothing to do with what we IT folk usually mean when we speak of stability: uninterrupted service. Instead, it is (at least in my humble opinion) an excuse to do a worse job at maintaining a system. But I’ll expand on that later.

Arch is unstable

I have since come to agree that Arch is unstable. Look through the Arch Linux news announcements and count how many updates required manual intervention. I would argue that the number is not excessive for over 20 years of news, but it is certainly more than Debian needed in the same amount of time. Is that a bad thing? Not necessarily. For my daily drivers (laptop and PC), these occasional manual interventions are basically irrelevant. Whenever my system stopped working, it was almost always something unrelated to Arch that broke (the thumb drive holding the LUKS key dying, or an idiot experimenting with manually managed Secure Boot).

Of course, I am subscribed to the Arch announcement mailing list, so I get an email every time an issue is known (and issues usually are known in advance, since Arch also has testing repositories where they get caught). If you are not subscribed, Arch can hurt you, unless you have set up your system specifically to sidestep these issues[4].

These interventions might be minor for 3 or 4 machines, but imagine doing them on 20 or even hundreds of machines. At that scale, they quickly become a real problem.

Configs matter!

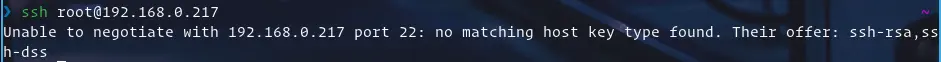

So, acknowledging that, why would I accuse some admins of doing an incomplete job at maintaining their systems? Because, in my opinion, maintaining a system is more than logging in once a year and running sudo apt update && sudo apt upgrade. An important part, if not the most important part, of running a secure server is maintaining its config files. How else would you describe a system that is woefully out of date, with config files to match?
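One low-effort way to see how far a machine’s configs have drifted is to look for the files the package manager leaves behind when it refuses to overwrite a config you modified. The sketch below assumes pacman’s .pacnew/.pacsave and dpkg’s .dpkg-dist/.dpkg-old naming; the function name list_pending_configs is hypothetical, not an existing tool.

```shell
# Hypothetical helper: list config files a package upgrade left next to the
# live config, i.e. new defaults that still need reviewing and merging.
# Assumed naming: pacman writes *.pacnew / *.pacsave,
# dpkg writes *.dpkg-dist / *.dpkg-old. Other tools use other suffixes.
list_pending_configs() {
    root="${1:-/etc}"   # directory to scan; defaults to /etc
    find "$root" -type f \( \
        -name '*.pacnew' -o -name '*.pacsave' \
        -o -name '*.dpkg-dist' -o -name '*.dpkg-old' \
    \) 2>/dev/null | sort
}
```

Run it as list_pending_configs /etc on a server: every line it prints is a new default you have not merged yet. On Arch, pacdiff from the pacman-contrib package can walk you through merging these interactively.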

It’s not getting better

To me, this is tech debt. Sure, updating the config may take half an hour, which you don’t have. Would you rather it take two days a few years from now? It’s common knowledge that tech debt does not scale linearly. Now multiply this by 10 services. Now do 100. At what point is it too much to do?

No downtime

How much downtime can this system have? The answer is almost always “none, of course”. Even ignoring the security issues (or rather, saving them for their own section): if a system must not have any downtime because it is mission-critical, you probably have a backup machine. Update the backup, promote it to main, then update the original main machine. Don’t have that? Then how “stable” (as in structurally sound) is your setup, really? I don’t have a hot backup for my servers, ready at a moment’s notice, but I do have a tested backup.
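A backup only counts as tested once you have actually restored it. Here is a minimal sketch of that idea for a file-level backup, assuming plain tar and diff are available; backup_and_verify is a hypothetical helper, not a real tool, and a real setup would restore onto a separate machine rather than a scratch directory.

```shell
# Hypothetical helper: archive a directory with tar, then prove the archive
# restores by extracting it into a scratch directory and comparing contents.
# Returns 0 only if the restored tree matches the source file by file.
backup_and_verify() {
    src="$1"       # directory to back up
    archive="$2"   # output .tar.gz; must NOT be inside $src
    tar -C "$src" -czf "$archive" . || return 1
    scratch=$(mktemp -d) || return 1
    if ! tar -C "$scratch" -xzf "$archive"; then
        rm -rf "$scratch"
        return 1
    fi
    diff -r "$src" "$scratch" >/dev/null   # compares contents only, not owners/modes
    rc=$?
    rm -rf "$scratch"
    return "$rc"
}
```

The archive path has to lie outside the source directory, otherwise tar tries to archive the file it is writing. Since diff -r only compares file contents, permissions and ownership are not verified by this sketch.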

EoL Software

Software versions aren’t fine wine that gets better with age. There is a point at which you shouldn’t use them anymore: End of Life or End of Support. Fortunately, your software dealer of choice got you a nice fix of extended product support. You’ll just do the migration during that period (because past a certain point, setting up a new system becomes easier than updating the existing one), right? Yeah, sure you will. It’s not like you’ve been kicking it down the road for 5 years now. You’ll probably get it done by the end of the month. Who are you kidding?

System goes poof

How does one make a backup? Exactly: just snapshot the VM. While that is a valid way of backing up a system, in my experience it is far easier to restore broken-beyond-repair systems from a file backup. This also happens to make one terrible thing so scary that you’ll avoid it like the plague:

Who knows if it will boot again?

Go to a server and run sleep 60; sudo reboot -f. How certain are you that the server will come back up? 50%? Less? Is adrenaline shooting through your system while you wait for the invisible clock to run out? Come on, 60 seconds, how long can that feel? Very long, if you don’t know whether a mission-critical system will come back up. Is that system stable, or is it more like XKCD #2347?

Not to brag, but if I run that command on one of my servers, I am confident it will be back up within seconds. It may take manual intervention if I forgot to enable a service, but only once. Sure, my servers are nowhere near as important as a company’s core infrastructure, but to me, the heart-attack-inducing reboot should belong to the “I’m doing this on the side because it’s fun” system, not to the one with multiple admins who are paid thousands.
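The “forgot a service” case usually means a unit that was started by hand but never enabled, so nothing starts it again after a reboot. On a systemd machine you can look for those before the scary reboot. A rough sketch: the function names are hypothetical, and services activated through sockets, timers or dependencies can show up as false positives even though they will come back fine.

```shell
# Hypothetical helpers: find services that are running right now but whose
# unit is "disabled", so nothing will start them again after a reboot.
# Rough check: socket-, timer- or dependency-activated services may be flagged
# even though they come back on their own.

# Reads "<unit> <is-enabled state>" pairs on stdin, prints units that are disabled.
flag_disabled() {
    while read -r unit state; do
        if [ "$state" = "disabled" ]; then
            printf '%s\n' "$unit"
        fi
    done
}

# Feeds the running services and their enablement state into flag_disabled.
check_reboot_survival() {
    systemctl list-units --type=service --state=running --no-legend --plain |
        while read -r unit rest; do
            printf '%s %s\n' "$unit" "$(systemctl is-enabled "$unit" 2>/dev/null)"
        done |
        flag_disabled
}
```

Anything check_reboot_survival prints is a candidate for systemctl enable. Splitting the filter out of the systemctl calls keeps the decision logic testable on machines without systemd.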

Security

Security is important. That’s why it’s better to stay on one version that has received all the security updates and none of the potentially bug-infested new code, right? I actually agree with this. As long as a project’s maintainers backport fixes, this is a valid approach for critical systems. More than once, serious issues in a new release were only fixed in its .1, and it would be insane not to acknowledge that. At the same time, new versions may add security features that would improve your system’s security. There is no right answer here, and I think it would be unprofessional to give one as a blanket statement.

Bugports

Backports are an impressive display of dedication, even more so when the upstream maintainers have already EoL’d a version. At that point, the backporting is (usually) done by volunteers, and for large projects like Debian this is a remarkable feat; I applaud every member of the backport teams. They have thwarted countless attacks on systems that should’ve been updated to a supported version ages ago.

Despite these efforts, two things must not be underestimated: the potential for new bugs, and security fixes that never get a CVE. No matter how good the developers doing the backporting are, there is no way to be sure that the backports themselves don’t contain bugs, potentially security-relevant ones. Additionally, not every security-relevant bug is assigned a CVE. Probably not even close. Those bugs will not pop up on the backporters’ radar and will remain unfixed.

How to do it better?

Consider standing up to your boss if they prevent you from doing your job and these issues bug you. Aside from that: update your shit! Sure, that might be one hell of a workload, but you have probably had it on the back burner long enough. At some point, even things on the back burner start charring. Take it off before that happens.

I might write a post about what my systems[5] look like, and I will link it here unless I forget. Remember: it’s not the uptime that counts most, but the system’s health. Uptime is merely a metric.

It is not bright colors but good drawing that makes figures beautiful.

— Titian

As always, please send your fan-mail, comments, or suggestions for improvement to the email address listed below. That way everyone can participate.

[1] Mandatory “I am using Arch, btw”.

[2] It’s basically Debian with extra Snap. But hey, at least the versions are frozen more regularly.

[3] Debian’s unstable branch, if you didn’t know. Basically rolling-release Debian.

[4] Boy, was I glad to boot via EFISTUB when this announcement came.

[5] At that point, you can judge whether my setup is better™.